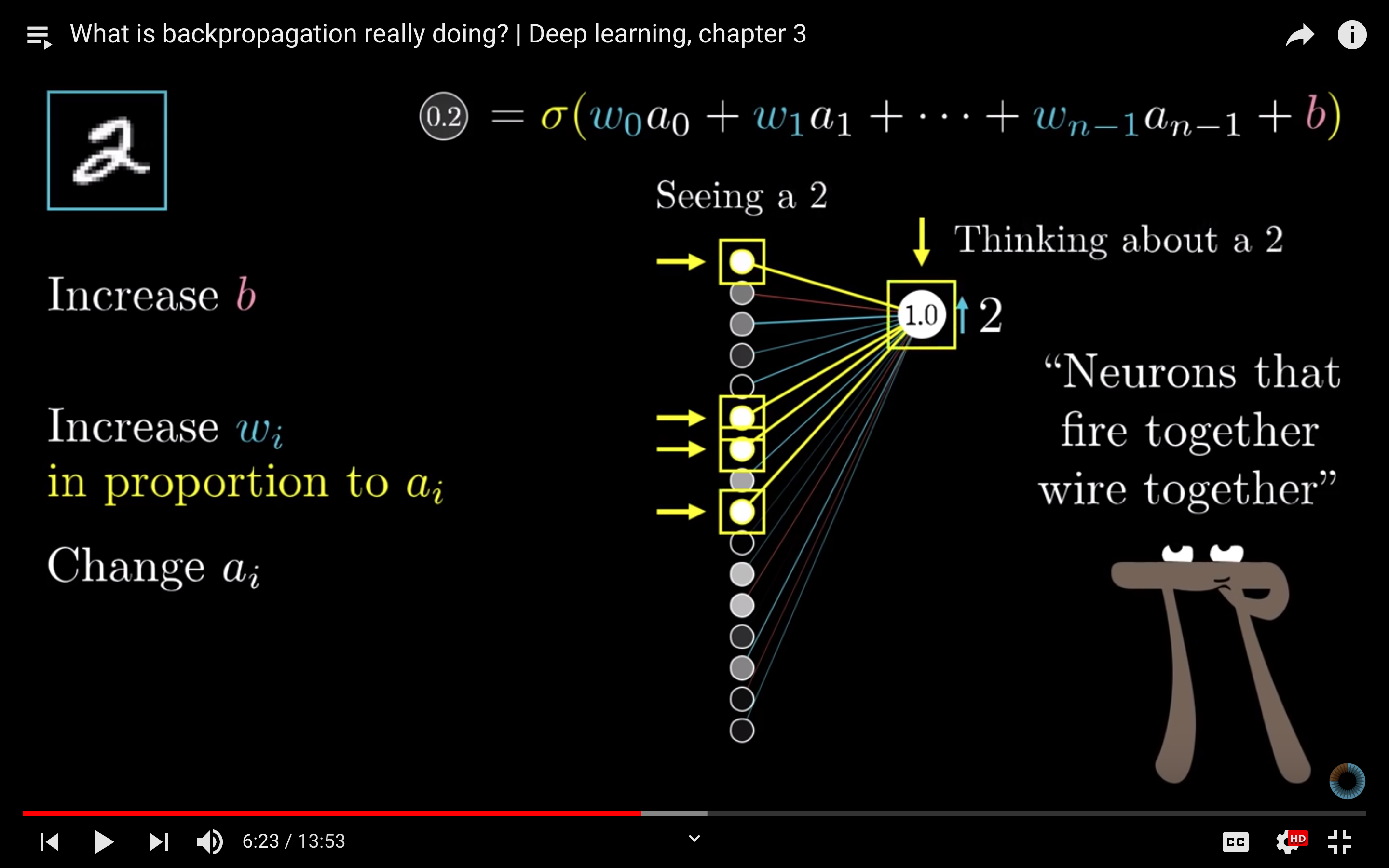

Neurons That Fire Together Wire Together


I’ve come back to the 3Blue1Brown YouTube series on neural networks a few times now, but only recently took the time to sit down and digest it thoroughly. This time around, I find myself wishing it had been my first stop when I started digging into deep learning earlier this year. At times, deep learning can feel like just a lot of funny mathematical notation, which in a sense it is, but these videos do a great job of keeping that notation while also giving an intuitive perspective on what is going on and why, one of the hallmarks of a great teacher.

Just as a small example, when explaining backpropagation, the presenter takes the time to mention the potential link to Hebbian theory. In a phrase, “neurons that fire together wire together.” Perhaps it’s a stretch to say that artificial neural nets really mimic the inner workings of a human brain, but the comparison sure does help solidify the concept.
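To make the phrase a bit more concrete, here is a minimal sketch of a plain Hebbian update in Python. This is my own toy illustration, not something taken from the videos, and it is not backpropagation: the function name `hebbian_update`, the learning rate `lr`, and the activity values are all made up for the example. The only idea it shows is that a weight grows when the neurons on both ends of it are active at the same time.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """One Hebbian step: w[i][j] grows by lr * post[i] * pre[j].

    weights[i][j] is the connection from input neuron j to output neuron i.
    All names and values here are hypothetical, chosen for illustration.
    """
    return [
        [w + lr * post_i * pre_j for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


# Three input neurons and two output neurons, starting with no connections.
pre = [1.0, 0.0, 1.0]   # inputs 0 and 2 fire, input 1 stays silent
post = [1.0, 0.0]       # output 0 fires, output 1 stays silent
weights = [[0.0] * len(pre) for _ in post]

# Present the same activity pattern a few times.
for _ in range(5):
    weights = hebbian_update(weights, pre, post)

for row in weights:
    print([round(w, 2) for w in row])
```

Only the connections between neurons that were active together (inputs 0 and 2 into output 0) get stronger; every weight touching a silent neuron stays at zero. Backpropagation uses an error signal rather than raw co-activity, so this is an analogy at best, but it is the "fire together, wire together" picture in a few lines.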
